How does a machine learning model perceive the world around it? Deep neural networks (DNNs) show increasingly human-like capabilities, from recognizing symbols in images to understanding spoken language and playing complex strategy games. But in practice, these models are still far from replicating many human abilities.

In a paper published in February in Open Mind: Discoveries in Cognitive Science, Nancy Kanwisher, Walter A. Rosenblith Professor of Cognitive Neuroscience in the Department of Brain and Cognitive Sciences (BCS), and colleagues sought to confront one of these discrepancies through their research into human and machine perception. Their paper, “Approximating human-level 3D visual inferences with deep neural networks,” was co-authored by Professor Josh Tenenbaum (also of BCS), Assistant Professor Vincent Sitzmann from the Department of Electrical Engineering & Computer Science, Assistant Professor Ayush Tewari of Cambridge University, and research scientists Thomas O’Connell, Tyler Bonnen, and Yoni Friedman. The paper examines the limitations of current AI models in understanding 3D shapes and explores how new approaches can bring machines closer to human-like perception.

Studies show that unlike humans, who can look at a 3D object and understand its shape, DNNs have trouble with tasks that require 3D understanding. While DNNs can accurately classify objects in an image or recognize faces with precision, they often struggle to infer the underlying 3D shape of objects, failing in scenarios where spatial reasoning is crucial. The research team wanted to understand why, and how scientists might close that gap in perceptual capabilities to better understand both human and machine perception. Using multiple models, they collected data based on each model’s architecture, its testing data, and the testing methods to gauge which factors contribute most to a DNN’s ability to perceive objects in space.

The research team started by adjusting the models’ perspectives, comparing their ability to recognize 3D objects from a single viewpoint with their performance when given multiple viewpoints. The models performed much better with varied viewpoints, which allowed them to more accurately approximate human understanding of 3D objects. They performed better still if they had seen the test object previously.

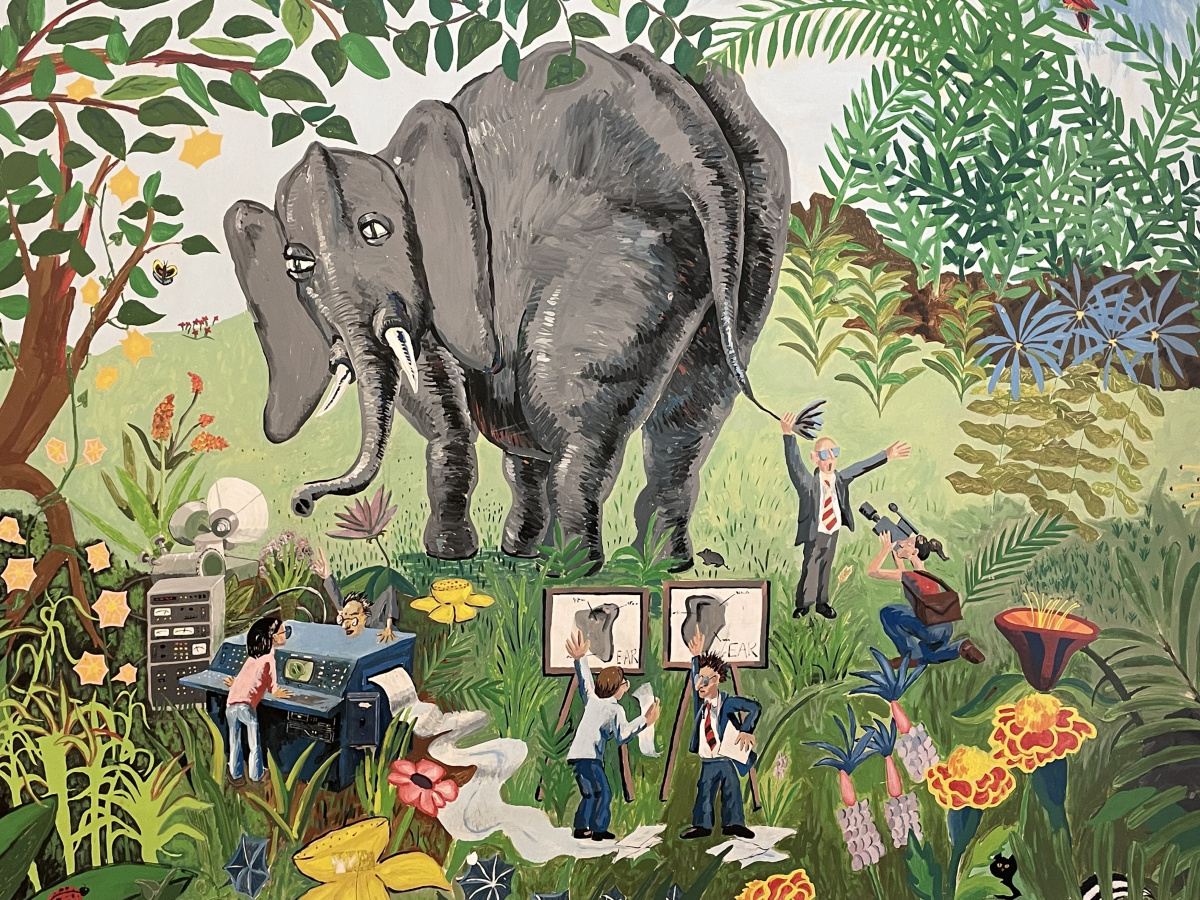

In the famous allegory, it takes six blind men working together to identify an elephant by touch alone. By combining their multiple perspectives, they are able to reach a reliable conclusion together—much like a deep neural network’s ability to identify a 3D object when given multiple viewpoints. This mural, located in a hallway leading to MIT’s Hayden Library, presents an interpretation of the story with scientists at the helm, working together to enhance their collective understanding.

Thomas O’Connell, lead research scientist on the project, said of the testing: “I think the biggest surprise as we were doing this research was the degree to which the DNN architecture had a much smaller impact on alignment to human behavior than the DNN training diet and procedures.” Certain specialized models have been developed with the express purpose of more accurately capturing 3D structures, and while those models performed well in the team’s tests, the researchers found that changing the testing conditions for less specialized architectures dramatically increased their efficacy. “We were struck by how much the alignment between standard convolutional networks and behavior was improved simply by providing the model with multiple views of the same object,” O’Connell noted, adding that “As we completed this work and since, there have been several other concurrent works coming to the same conclusion: that the main factor driving DNN to human alignment is the type of data the DNN sees during training and the training objective for the model, rather than the structure of the architecture.”

Kanwisher and O’Connell predict that this research can be applied to more disciplines than just machine learning. “In computer science,” they note, “evaluating the success of specialized 3D architectures is an essential step to confirm a model is indeed an advancement from others that have come before.” And Kanwisher and O’Connell are already in the process of conducting neuroscientific research based on their findings: if a DNN is aligned with a particular human behavior, “that naturally leads to the question of where in the brain that behavior is being computed.” They encourage researchers to explore new approaches—like neural and behavioral constraints or probabilistic programming—to further this research and to build models that see the world as humans do.