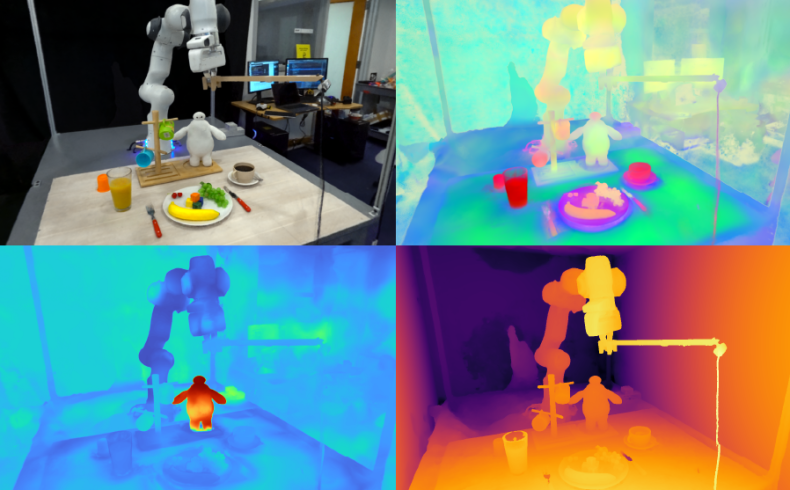

By blending 2D images with foundation models to build 3D feature fields, a new MIT method helps robots understand and manipulate nearby objects with open-ended language prompts.

Inspired by humans' ability to handle unfamiliar objects, a group from MIT's Computer Science and Artificial Intelligence Laboratory (CSAIL) designed Feature Fields for Robotic Manipulation (F3RM), a system that blends 2D images with foundation model features into 3D scenes to help robots identify and grasp nearby items. F3RM can interpret open-ended language prompts from humans, making the method helpful in real-world environments that contain thousands of objects, like warehouses and households.